It's easy to make lots and lots of Tableau workbooks. In fact, they tend to sprout up all over the place. This is a good thing. Tableau is most valuable when it's being thoroughly exercised, when people are putting it through its paces, exploring data, trying this and that, experimenting with approaches and techniques.

On the other hand, this very wonderfulness has its consequences and costs. This post is about one of them, and a solution to the problem that arises. (problem here in the technical sense, without any value judgement)

There are multiple connections to the same data scattered through the workbooks, and there's a business need to make sure that the data's semantics are consistently presented to the workbooks' users via informative comments on the Tableau connections' fields.

This post presents a way to make sure that all of the references to the same field in a set of workbooks have the same comments.

The short version is that it uses a standard Tableau workbook containing the appropriate comments and a Ruby script to apply those comments to the fields in other workbooks. The details follow.

The mouse pointer isn't visible in the screen caps above, but it's hovering over Continent on both cases. The one on the right shows the comment "What, no Antartica?", the one on the left has no comment.

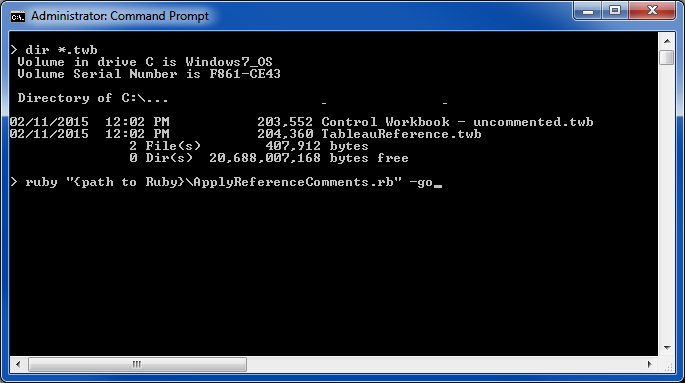

Our goal is to have the comment, and all the fields' comments from the commented connection applied to them in the other workbook. Here's the procedure:

- Rename or copy the commented workbook to "

TableauReference.twb". - Put it in the directory where the uncommented workbook(s) are.

- Have Ruby installed (directions below).

- Have the Ruby script "

ApplyReferenceComments.rb" below available—it doesn't really matter where, but it's more convenient to have it in the same directory. - Run "

ApplyReferenceComments.rb". - Watch the magic happen.

ApplyReferenceComments.rb is inline below and can be copied from there, or you can download it from here.

# ApplyReferenceComments.rb - this Ruby script Copyright 2014,5 Christopher Gerrard

require 'nokogiri'

require 'Set'

$csvFileName = 'AppliedFieldComments.csv'

$csvFile = File.open($csvFileName,'w')

$csvFile.puts 'Workbook,Data Source,Field Caption,Field Name,Field Title,Comment' unless $csvFile.nil?

$CSVHeader = <<CSVHEADER.gsub("\n",'')

Workbook,

Data Source,

Field Caption,

Field Name,

Field Title,

capComment.nil?,

nameComment.nil?,

refComment.nil?

CSVHEADER

$activityCSV = File.open('activity.csv','w')

$activityCSV.puts $CSVHeader unless $activityCSV.nil?

$errorFile = File.open('ReferenceComments.err','w')

$referenceTWB = 'TableauReference.twb'

$refFieldComments = {}

$referenceFields = File.open('ReferenceFields.csv','w')

$referenceFields.puts('Data Source,Field #,Name Type,Name,Comment')

def docUsage(arg1="",arg2="")

puts arg1

puts " USAGE:"

puts " "

puts " run: ruby ApplyReferenceComments.rb -go {twbFileSpec}"

puts " twbFile.twb - explicit file name"

puts " 'f*.twb' - name with wild card(s)"

puts " "

puts " help: ruby ApplyReferenceComments.rb -help "

puts " "

puts " tech: ruby ApplyReferenceComments.rb -tech "

puts arg2

end

def docHeader(arg1="",arg2="")

puts arg1

puts " #{$0}"

puts " "

puts " - Applying Field Comments to Tableau Workbooks, using '#{$referenceTWB}' "

puts " as the comments\' reference source."

puts arg2

end

def docNotes(arg1="",arg2="")

puts arg1

puts " help information:"

puts " "

puts " - Ruby must be in your PATH, or executed as {path}\\ruby.exe"

puts " "

puts " - This script must be in this dir, or executed as {path}\\#{$0}"

puts " "

puts " - Identifying TWBs for commenting."

puts " The TWBs to be processed may be declared on the command line after \'-go\'"

puts " "

puts " Default - all the TWBs will be processed. e.g. -go "

puts " (those not already commented)"

puts " A single TWB - can be identified by name, e.g. -go BigData.twb"

puts " the name can be quoted or not."

puts " Multiple TWBs - can be identified via wild cards, e.g. -go 'Big*.twb'"

puts " this must be quoted, if not only "

puts " the first matching TWB counts."

puts " "

puts " - Commented TWBs"

puts " TWBs that have reference comments added to any of their fields will be "

puts " saved with '.comment' added to their names, e.g.: "

puts " "

puts " BigData.twb => BigData.commented.twb "

puts " YabbaDabbaDo.twb => YabbaDabbaDo.commented.twb "

puts " "

puts " This ensures that no TWBs will be harmed during this process."

puts arg

end

def docTech(arg1="",arg2="")

puts arg1

puts " technical information:"

puts " "

puts " - Identifying Reference Comments "

puts " "

puts " - '#{$referenceTWB}'"

puts " must be present to serve as the Fields\' comments source."

puts " The reference fields' comments will be compiled from the fields found"

puts " in '#{$referenceTWB}' into a list of fields and their comments."

puts " Any problems locating or loading comments from '#{$referenceTWB}'"

puts " will raise an error message, and no TWB processing will occur."

puts " "

puts " '#{$referenceTWB}' Data Connections"

puts " - In many cases there will be a single canonical table/view used as"

puts " the source of reference comments."

puts " - However, it's possible that '#{$referenceTWB}' may contain"

puts " more than one data connection, in which case the connections will be"

puts " processed in the order in which they occur in '#{$referenceTWB}',"

puts " and this order is unpredictable."

puts " - In the case of the same field occurring in more than one reference"

puts " Data Connection, the last one wins, i.e. the last field identified"

puts " during the compilation process provides the reference comment."

puts " "

puts " - Applying Reference Comments"

puts " - Fields in the TWBs that match one of the reference fields will be provided"

puts " with the reference field's comment."

puts " - Existing field comments will be discarded."

puts " "

puts " - Field matching occurs using either of the field's names:"

puts " - Technical - the field name in the database, or "

puts " - Presentation - the 'Tableau' field name, i.e. the field has been renamed. "

puts " "

puts " Fields that reference the same database field may not have the same Tableau"

puts " name in different workbooks. This process considers that matching either the "

puts " Technicalor Presentation name identifies a candidate field as matching and "

puts " it will then be commented with the reference comment."

puts " "

puts " The candidate and reference fields' data types need not match, this is deliberate. "

puts " "

puts " - Commented fields will be recorded in #{$csvFileName}, which can "

puts " be examined (with Tableau, naturally) to see which fields have been commented."

puts arg2

end

def docCaution(arg1="",arg2="")

puts arg1

puts " USE WITH CAUTION"

puts " ---------------------------------------------------------------------------------"

puts " This process will add comments to all Db fields in the local TWBs that match the "

puts " reference fields."

puts " "

puts " This is a destructive process - any existing comments will be discarded and cannot"

puts " be recovered."

puts " "

puts " Run this process on copies of your workbooks, and check before and after running"

puts " this process to make sure that everything is as it should be."

puts arg2

end

# IMPORTANT

# Last comment wins - if more than one reference field exists with the same name

# the last one encountered will be used as the reference field

def loadReferenceComments

refTwbExists = File.file?($referenceTWB)

# puts "Reference twb Exists? :: #{refTwbExists}"

return false unless refTwbExists

doc = Nokogiri::XML(open($referenceTWB))

dataSources = doc.xpath("//workbook/datasources/datasource").to_a

dataSources.each do |ds|

dsName = ds.xpath('./@name').text

dsCap = ds.xpath('./@caption').text

dsName = if dsCap == '' then dsName else dsCap end

fieldNodes = ds.xpath('./column').to_a

fieldNodes.each do |col|

colCap = col.attr('caption')

colName = col.attr('name')

colTitle = if colCap == '' or colCap.nil? then colName else colCap end

comment = col.at_xpath('./desc')

recordReference(dsName,colCap,colName,comment) unless comment.nil?

end

end

puts " - Reference fields loaded.\n\n"

return refTwbExists

end

$refFieldNum = 0

def recordReference(dsName,colCap,colName,comment)

return if comment.nil?

# --

$refFieldComments[colName.upcase] = comment unless colName.nil? or colName == ''

$refFieldComments[colCap] = comment unless colCap.nil? or colCap == ''

# --

text = comment.text.gsub(/[ ]*\n[ ]*/, ' ').gsub(',', ' ').gsub(/\t/, ' ')

$refFieldNum += 1

$referenceFields.puts("#{dsName},#{$refFieldNum},Caption,#{colCap},#{text}")

$referenceFields.puts("#{dsName},#{$refFieldNum},Name,#{colName},#{text}")

$referenceFields.puts("#{dsName},#{$refFieldNum},Name - UC,#{colName.upcase},#{text}")

end

def addComment(colNode, commentNode)

# Tableau only recognizes the first node as a comment so this

# method removes any/all existing nodes before adding the new one

descNodes = colNode.xpath('desc')

descNodes.each { |dNode| dNode.remove }

colNode << commentNode

return true

end

def writeTWB(doc, twb)

commentedTWB = twb.sub(/.twb$/,'.commented.twb')

newTwb = File.open("#{commentedTWB}",'w')

newTwb.puts(doc)

newTwb.close

puts " #{commentedTWB}"

end

# These are the standard data Tableau column attributes for the Teradata

# types identifed by inspection in the TWB metadata-record elements.

# This script is written to handle Teradata sources, may need adjustment

# for other data sources.

$mdRecProps = {

'date' => {'datatype' => 'real' , 'role' => 'measure' , 'type' => 'quantitative' },

'integer' => {'datatype' => 'integer', 'role' => 'measure' , 'type' => 'quantitative' },

'real' => {'datatype' => 'real' , 'role' => 'measure' , 'type' => 'quantitative' },

'string' => {'datatype' => 'string' , 'role' => 'dimension', 'type' => 'nominal' }

}

def getMetadataRecordFields(twb, doc, dsName, dataSource, twbFields)

mdFields = dataSource.xpath('./connection/metadata-records/metadata-record[@class="column"]')

mdFields.each do |f|

localName = f.at_xpath('local-name').text

seen = twbFields.include?(localName)

if !seen then

refComment = $refFieldComments[localName.upcase] # - get a reference comment for the column name, if any

if !refComment.nil? then

type = f.at_xpath('local-type').text

attrs = $mdRecProps[type]

if !attrs.nil? then

colNode = Nokogiri::XML::Node.new('column', doc)

colNode.set_attribute('name',localName)

attrs.each do |attr, value|

colNode.set_attribute(attr, value)

end

colNode << refComment

dataSource << colNode

else

$errorFile.print "ERROR getMetadataRecordFields - no attributes found for metadata-record:"

$errorFile.puts "'#{localName}' in dataSource:'#{dsName}' in TWB:'#{twb}'"

end

end

end

# puts

end

end

# ====================== /resolving metadata, non-column fields ======================================

def processTWB twb

twbFields = Set.new # use to keep track of fields found, by their technical/DB names

return if twb =~ /.*[.]commented.twb$/ # don't process already processed TWBs

puts "\n - #{twb}"

doc = Nokogiri::XML(open(twb))

dataSources = doc.xpath("//workbook/datasources/datasource").to_a

# puts " - loaded"

isCommented = false

dataSources.each do |ds|

dsName = ds.xpath('./@name').text

dsCap = ds.xpath('./@caption').text

dsName = if dsCap == '' then dsName else dsCap end

# puts " - DS: #{dsName}"

fieldNodes = ds.xpath('./column').to_a

fieldNodes.each do |col|

colCap = col.attr('caption')

colName = col.attr('name')

colTitle = if colCap == '' or colCap.nil? then colName else colCap end

# puts " - col: #{colName}"

twbFields.add(colName) # register seen Columns for ref during handling

capComment = $refFieldComments[colCap]

nameComment = $refFieldComments[colName.upcase]

refComment = !capComment.nil? ? capComment : nameComment

$activityCSV.print "#{twb},#{dsName},#{colCap},#{colName},#{colTitle}"

$activityCSV.puts ",#{capComment.nil?},#{nameComment.nil?},#{refComment.nil?}"

unless refComment.nil? then

# puts " - comm:\t #{colName} "

isCommented = addComment(col,refComment)

if isCommented then

twbFields.add colName

title = colTitle.sub(/^\[/,'').sub(/\]$/,'')

comment = refComment.text.gsub(/[ ]*\n[ ]*/, ' ').gsub(',', ' ').gsub(/\t/, ' ')

$csvFile.puts "\"#{twb}\",\"#{dsName}\",#{colCap},#{colName},\"#{title}\",\"#{comment}\""

end

end

end

getMetadataRecordFields(twb, doc, dsName, ds, twbFields)

end

# puts "\n\n===="

# twbFields.each { |f| puts f }

writeTWB(doc, twb) if isCommented

end

def process(arg1='*.twb')

docHeader "\n\n\n"

if loadReferenceComments then

puts "\n\n Processing TWBs named '#{arg1}'...\n"

Dir.glob(arg1) { |twb| processTWB twb unless twb == $referenceTWB }

$csvFile.close unless $csvFile.nil?

puts "\n\n Done.\n\n\n"

puts "\n That's all, Folks.\n\n"

else

puts " ERROR"

puts " ---------------------------------------------------------------\n"

puts " Either:"

puts " - The Comments Reference TWB: #{$referenceTWB} is not present."

puts " or "

puts " - A problem occurred loading the reference comments from it."

end

end

def usage

docHeader "\n\n\n"

docUsage "\n\n\n", "\n\n\n"

end

def help

docHeader "\n\n"

docUsage "\n"

docNotes "\n\n"

docCaution "\n\n", "\n\n\n"

end

def tech

docHeader "\n\n"

docUsage "\n"

docNotes "\n\n"

docTech "\n"

docCaution "\n", "\n\n\n"

end

system "cls"

# what to do?

case ARGV[0]

when /[-]+[g][o]?$/i

ARGV[1].nil? ? process : process(ARGV[1])

when /[-]+(h|help)$/i

help

when /[-]+(t|tech)$/i

tech

else

usage

end

#$csvFile.close unless $csvFile.nil?

Notice that running "ApplyReferenceComments.rb" requires using "-go" on the command line. Not providing "-go" will cause "ApplyReferenceComments.rb" to provide usage information, including how to see help and technical information. These are shown below.

Note that whenever a Workbook is found ApplyReferenceComments.rb lists it, and then if there are any comments added to it the original Workbook is saved with ".commented" appended to its name. This ensures that the original TWB is left unchanged.

A record of the comments that are applied is kept as data in the CSV file "AppliedFieldComments.csv". Here's an image of a Tableau workbook showing the results of the scenario above.

There's only one Workbook and one Data Source, so they're shown in the sheet's title do the sheet can be narrow enough to display the whole worksheet.

This script has worked well for my situation and needs.

It may or may not work as well for you in yours.

There are no guarantees that it will do anything useful.

Rumor has it that Tableau has told one Tableau tools vendor that Workbook XML will not change in any ways to break existing tools and utilities. This would be good, there's been no public commitment to this that I know of and Tableau 9 could break everything.

These utilities evolve fairly rapidly as needs and conditions change. I envourage you to take this code and use it as the basis of your work, and hope that if you do you'll leave a comment and a link to your new stuff. The more tools the community creates the better for all of us.

I'm very interested in hearing about any experiences with this, good and bad. Please leave a comment if you give it a try.